Physics-Driven Diffusion Models for Impact Sound Synthesis from Videos

Physics-Driven Diffusion Models For Impact Sound Synthesis From Videos | DeepAI

We design a physics-driven diffusion model with different training and inference pipelines for impact sound synthesis from videos. To the best of our knowledge, ours is the first work to synthesize impact sounds from videos using a diffusion model. We thank the authors of DiffImpact for inspiring us to use a physics-based sound synthesis method to design physics priors, which serve as a conditional signal that guides the deep generative model as it synthesizes impact sounds from videos.
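Physics-based impact sound models of the kind credited to DiffImpact are commonly built on modal synthesis, where a struck object's sound is approximated as a sum of exponentially damped sinusoids, one per vibration mode. The sketch below assumes such a modal model to show what "physics parameters" (frequency, decay rate, amplitude per mode) look like in practice. The function name `modal_impact_sound`, its parameters, and the example values are hypothetical and are not taken from the paper's code.

```python
import numpy as np


def modal_impact_sound(freqs_hz, decays, amps, sr=16000, duration=1.0):
    """Synthesize an impact sound as a sum of exponentially damped sinusoids.

    freqs_hz, decays, amps: 1-D sequences with one entry per mode.
    decays are rates in 1/s, so mode k's envelope is amps[k] * exp(-decays[k] * t).
    """
    freqs_hz = np.asarray(freqs_hz, dtype=float)
    decays = np.asarray(decays, dtype=float)
    amps = np.asarray(amps, dtype=float)
    if not (freqs_hz.shape == decays.shape == amps.shape):
        raise ValueError("freqs_hz, decays and amps must have the same shape")
    t = np.arange(int(sr * duration)) / sr  # time axis in seconds
    # One row per mode: damped envelope times a sinusoid at the mode frequency.
    modes = (amps[:, None]
             * np.exp(-decays[:, None] * t[None, :])
             * np.sin(2.0 * np.pi * freqs_hz[:, None] * t[None, :]))
    return modes.sum(axis=0)  # mix all modes into one waveform


# Hypothetical parameters for a three-mode "struck object".
wave = modal_impact_sound(freqs_hz=[440.0, 1230.0, 2710.0],
                          decays=[6.0, 12.0, 25.0],
                          amps=[1.0, 0.5, 0.25])
print(wave.shape)  # (16000,)
```

Because every parameter has a physical meaning, a handful of numbers fully describes the sound, which is what makes such parameters attractive as a compact conditioning signal.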

(PDF) Physics-Driven Diffusion Models For Impact Sound Synthesis From Videos

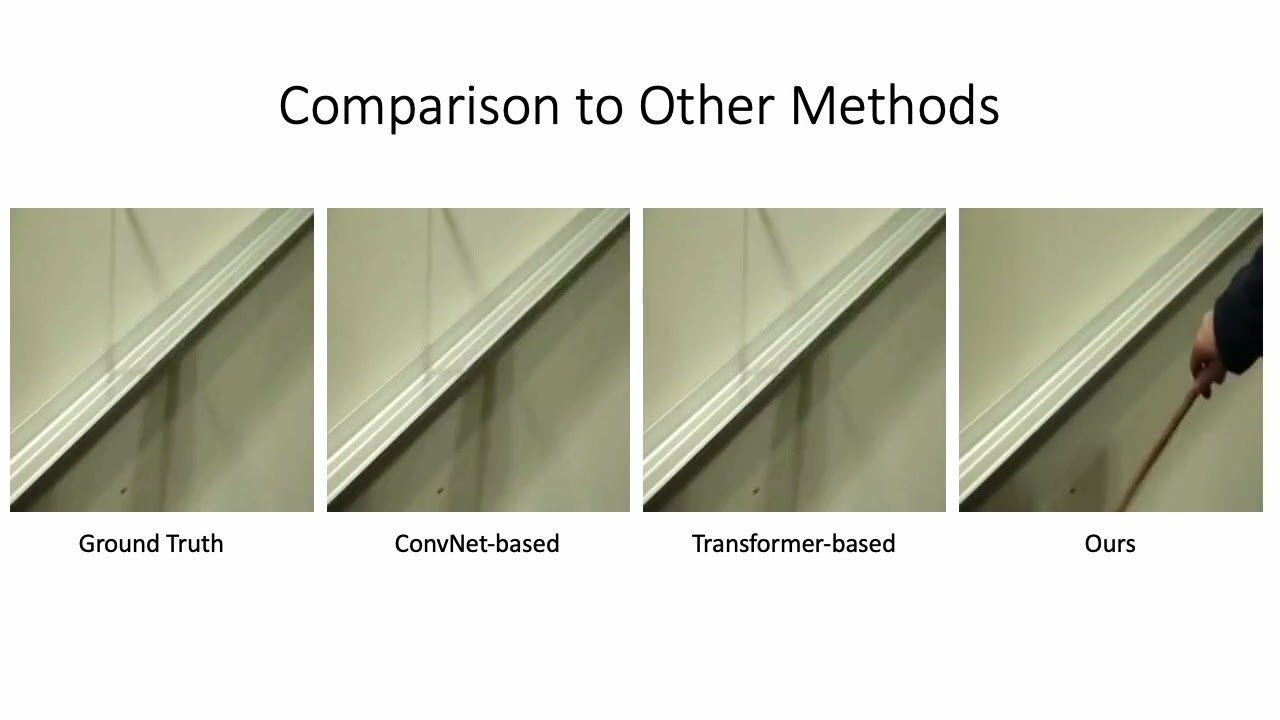

Modeling sounds emitted from physical object interactions is critical for immersive perceptual experiences in real and virtual worlds. Traditional methods of im… In this work, we propose a physics-driven diffusion model that can synthesize high-fidelity impact sound for a silent video clip; it outperforms several existing systems in generating realistic impact sounds.
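The snippet does not spell out the diffusion objective. As a hedged illustration, the sketch below shows the standard DDPM training step: noise is added to a clean audio representation, and a conditional network learns to predict that noise. Assuming the model follows this common epsilon-prediction formulation, the video and physics features would enter through the `cond` argument; the paper may use a different parameterization or audio representation. All names, shapes, and the zero-output placeholder network are hypothetical.

```python
import numpy as np


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule as used in the original DDPM paper."""
    return np.linspace(beta_start, beta_end, num_steps)


def q_sample(x0, t, alpha_bar, noise):
    """Forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise


def diffusion_loss(denoiser, x0, cond, alpha_bar, rng):
    """Epsilon-prediction loss for a conditional denoiser denoiser(x_t, t, cond)."""
    t = int(rng.integers(0, len(alpha_bar)))  # random timestep
    noise = rng.standard_normal(x0.shape)
    x_t = q_sample(x0, t, alpha_bar, noise)
    pred = denoiser(x_t, t, cond)  # network predicts the added noise
    return float(np.mean((pred - noise) ** 2))


betas = linear_beta_schedule()
alpha_bar = np.cumprod(1.0 - betas)  # cumulative signal retention per step

rng = np.random.default_rng(0)
x0 = rng.standard_normal((80, 100))  # hypothetical stand-in for an 80-bin spectrogram
cond = rng.standard_normal(64)       # hypothetical stand-in for video + physics features


def zero_denoiser(x_t, t, cond):
    """Placeholder network that always predicts zero noise."""
    return np.zeros_like(x_t)


loss = diffusion_loss(zero_denoiser, x0, cond, alpha_bar, rng)
```

With the placeholder network the loss is simply the mean squared noise, close to 1; a trained network conditioned on informative features should drive it lower.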

Table 1 From Physics-Driven Diffusion Models For Impact Sound Synthesis From Videos | Semantic ...

In this work, we propose a physics-driven diffusion model that can synthesize high-fidelity impact sound for a silent video clip. In addition to the video content, we propose to use physics priors to guide the impact sound synthesis procedure, so the diffusion model combines physics priors with visual information. Experimental results show that the proposed model outperforms existing systems in generating realistic impact sounds while maintaining interpretability and transparency for sound editing.
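Why physics priors help with sound editing can be illustrated with a toy conditioning vector: if the physics part of the condition is expressed in named physical quantities, an edit such as lowering the decay rates changes a known, isolated slice of the condition before it reaches the generator. The sketch below assumes simple concatenation of a modal-parameter vector with a visual embedding; the function names, the log-scaling, and the 512-dimensional visual stand-in are illustrative assumptions, not the paper's architecture.

```python
import numpy as np


def physics_condition(freqs_hz, decays, amps):
    """Flatten modal parameters into one vector (hypothetical encoding).

    Frequencies and decay rates are log-scaled so they occupy comparable ranges.
    """
    return np.concatenate([np.log(freqs_hz), np.log(decays),
                           np.asarray(amps, dtype=float)])


def fuse_condition(physics_vec, visual_vec):
    """Hypothetical fusion: concatenate the physics prior and the visual embedding."""
    return np.concatenate([physics_vec, visual_vec])


def scale_decays(decays, factor):
    """Editing example: factor < 1 lowers decay rates, so modes ring longer."""
    return np.asarray(decays, dtype=float) * factor


freqs = np.array([440.0, 1230.0, 2710.0])
decays = np.array([6.0, 12.0, 25.0])
amps = np.array([1.0, 0.5, 0.25])
visual = np.zeros(512)  # stand-in for a video encoder's output embedding

cond = fuse_condition(physics_condition(freqs, decays, amps), visual)
edited = fuse_condition(physics_condition(freqs, scale_decays(decays, 0.5), amps), visual)
```

Only the three decay entries of the condition change (each shifts by log 0.5); under the concatenation assumption, re-running the generator with `edited` would yield the longer-ringing variant while the visual context stays fixed.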

CVPR 2023: Physics-driven Diffusion Models for Impact Sound Synthesis from Videos

